Pushing Design’s Edges with Sound Interactions

Jessica Barness

School of Visual Communication Design

Kent State University

ABSTRACT

Sound is temporal, social, invisible, and physical. Though sound has long been part of design, it has not held a prominent place in our discourse. Designers have been attracted to sound for some time, and digital work over the past couple of decades shows our visceral tendencies toward audial experimentation. While design education has historically focused on visual communication, the ways we might create, select, and remix sound can positively influence a holistic approach to projects. As an integral part of digital and physical environments, sound transforms the way we might speak to an audience and plays a role in shaping human experiences.

This paper seeks to locate the relationship between sound and design, and calls for further investigation. What does sound mean to designers, and where does it reside in relation to what we already know? How can we use this invisible medium to advance opportunities and solve complex problems? These questions, situated at the edges of our visual knowledge base, push toward embracing audial content in the design of interactions. This investigation begins with the historical connections between vision and sound in design, and continues in two parts: 1) a concept analysis of sound in scholarly design literature and 2) student prototypes using sound from a course that brings together interaction design, visual communication, and physical environments.

BACKGROUND

Sound is a thing, an action, a mediator, and a feeling. It’s an integral part of everyday life and communicates in ways that visual or tactile experiences cannot. We listen intuitively and make sounds expressively. These properties of sound do not require research to comprehend. Sound seems like a natural fit when designing for interaction and communication, yet design education focuses on visual media and strategies. This project began as an effort to identify the role of sound as a concept and medium in design, understand how it has been used, and consider what we might do with it in the future.

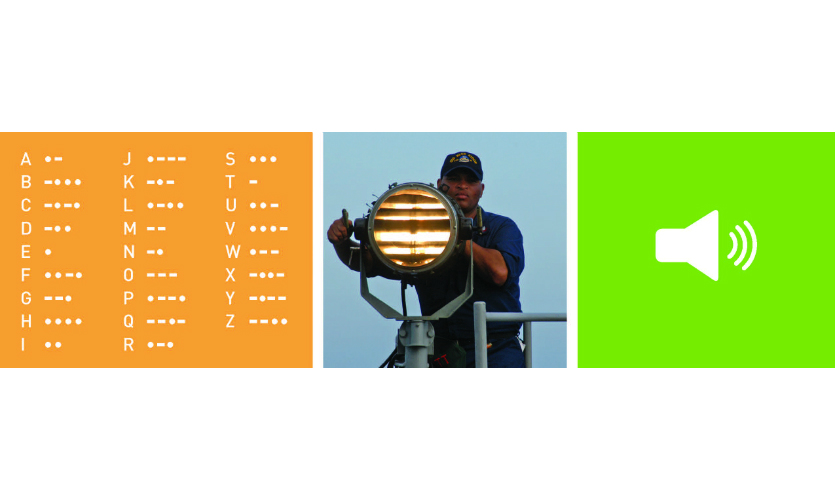

Historically, vision and sound have been connected through typography (as visualized language) and Morse code, and both have advanced through technology. The symbol-to-sound mapping of the Phoenician and ancient Greek writing systems led to the modern Latin alphabet and is represented in modern typeface designs. Morse code (Figure 1), invented in 1832 by Samuel F. B. Morse, an artist and president of the National Academy of Design, facilitated rapid communication over long distances and could be read, seen, heard, written, and spoken. Morse's designed code functions as a temporary stand-in for the alphabet during its transmission via telegraph. Sending and receiving is not a matter of hearing dashes and dots but of understanding a rhythm: like collage (vision) and music (sound), the whole is different from the sum of its individual parts. 1
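To make the point about rhythm concrete, the sketch below converts text into a sequence of timed tones and silences. It assumes International Morse code and its conventional timing (a dot lasts one unit, a dash three, with gaps of one unit between elements, three between letters, and seven between words); this is a later standard rather than the code Morse first devised. The function names `to_rhythm` and `render` and the partial letter table are hypothetical, for illustration only.

```python
# A minimal sketch, assuming International Morse code timing; this is not
# Morse's original 1830s American code, and all names here are hypothetical.
DOT, DASH = 1, 3                             # tone lengths, in time units
ELEMENT_GAP, LETTER_GAP, WORD_GAP = 1, 3, 7  # silence lengths, in time units

# Partial table for illustration; a full table would cover A-Z and 0-9.
MORSE = {
    "A": ".-", "D": "-..", "E": ".", "G": "--.", "I": "..",
    "N": "-.", "O": "---", "S": "...", "T": "-",
}


def to_rhythm(text: str) -> list[tuple[bool, int]]:
    """Return (sounding, units) pairs: True is a tone, False is silence."""
    rhythm: list[tuple[bool, int]] = []
    for i, word in enumerate(text.upper().split()):
        if i > 0:
            rhythm.append((False, WORD_GAP))
        for j, letter in enumerate(word):
            if j > 0:
                rhythm.append((False, LETTER_GAP))
            for k, symbol in enumerate(MORSE[letter]):
                if k > 0:
                    rhythm.append((False, ELEMENT_GAP))
                rhythm.append((True, DOT if symbol == "." else DASH))
    return rhythm


def render(rhythm: list[tuple[bool, int]]) -> str:
    """Draw the rhythm as text: '=' per unit of tone, '.' per unit of silence."""
    return "".join(("=" if sounding else ".") * units for sounding, units in rhythm)
```

For example, `render(to_rhythm("SOS"))` yields `=.=.=...===.===.===...=.=.=`, where `=` marks one unit of tone and `.` one unit of silence. Laid out this way, the message reads as a single rhythmic pattern rather than as nine separate symbols.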

Technology is often what allows the use of a particular medium to advance. Morse code was originally tied to the electrical telegraph and was later transmitted by radio (sound) or by light sources such as the Aldis lamp (vision); the art of typography was enabled by movable type on the printing press and later flourished with computer technology. Notation systems for letters and for sounds, as means to shape and preserve meaning, were revolutionized by computing almost concurrently. In 1957, Max Mathews wrote the computer program Music at Bell Laboratories and was the first to digitize speech and music. Soon after, Ivan Sutherland's Sketchpad program at MIT was a breakthrough in human-computer interaction wherein "the computer will be able to assist a user not only in arriving at a nice looking drawing, but also in arriving at a sound design" (Sutherland 1963). 2 While Mathews was primarily focused on digital sound, he also helped pioneer computer typesetting (Mathews & Miller 1965). Graphic 1, a system for composing Mathews' digital music with a light pen on a screen, was developed at Bell Labs in 1968, introducing "the concept of interactive, real-time composition on a computer screen with cut-and-paste capabilities, years before personal computers would make this functionality commonplace" (Holmes 2005, p. 275).

These computing technologies may be considered the precursors to our current graphic and audio software systems, which are readily available for research, education, and practice. If sound can be digitally shaped like visual media, how might designers embrace this new-old territory for interaction and communication?

METHODOLOGY

The goals of this project were to locate sound in design through documented research as well as more intuitive making and designing. Looking at both provides a more inclusive understanding of how designers are working with sound. Two parallel methods were used: 1) a concept analysis of design literature and 2) design student prototyping. My intention was to locate thematic clusters of sound in design, not define its use in absolute terms.

Understanding a concept within a profession is critical to linking theory, research, and practice, and analyzing and clarifying the concept is part of developing this knowledge. To better understand the use of sound in design, a concept analysis (Walker & Avant 2005) was completed to uncover scholarly use of the term. This required gathering scholarly articles and examining the concept of sound within each; it also served as a focused, preliminary literature review. It should be noted that a concept analysis is interpretive and shaped by the language of a profession, and the researcher's interpretation influences the conclusions. There were two criteria for selecting articles. First, an article must have been published in a peer-reviewed design or design-related new media journal. Second, it needed to have the word "sound" (or a suffixed variation) in its title, indicating the authors' level of engagement with the concept. Of the 11 periodicals selected for this research, six contained articles that met the criteria, and 28 articles were analyzed (Table 1). 3

The concept analysis followed an example on the concept of creativity (Pedersen & Burton 2009) and consisted of the following steps: 1) find the concept within the articles, 2) describe its contextual usage, and 3) determine antecedents and consequences. That process also included the construction of model cases for the concept's use; however, because my goal was to identify a variety of uses of sound in design rather than a single definition, this step was not considered useful and was omitted. A spreadsheet was used to track the data: instances of sound were located and highlighted within each article, and the implicit and explicit attributes contextualizing sound were recorded.

In contrast with existing peer-reviewed research related to sound, student creative work provides an alternative, intuitive means to examine the role(s) of sound in practice. Student work from an advanced, special-topics interaction design course provided insight into sound within a visual communication design program. The students were not aware of the concept analysis, nor were my findings brought into the classroom. In this course, prototyping holds a primary focus. Students are tasked with imagining user-centered products and systems that connect people with digital and physical spaces; this is a studio community that encourages looking at both existing and futuristic possibilities. It is critical, therefore, that students understand the importance of communicating complex ideas to colleagues, developers, manufacturers, stakeholders, and the public. In the first two of three assigned projects, students were not instructed to work specifically with sound. Instead, their use of technologies and media was allowed to emerge as a response to unframed problems.

Each of the assignments followed a prototyping cycle of research and ideation, making, testing, and refining. Final solutions were presented as contextual narratives, such as clickable interfaces, video demonstrations, or a built environment. The first project focused on personal identity and everyday problems, and students conducted primary research in the form of interviews to understand daily stress before developing a helpful product or system. In the second project, students formed teams of three to design a system of digital interaction to connect people with a physical environment; this required secondary research on places, technologies, and social issues. Finally, the third project was a designed environment, in the form of a collaborative exhibition. Interaction design students teamed with retail environment design and museum studies students to explore multimodality. 4 Each team focused on one mode of interaction: sound, movement, text, or touch. The topic of the exhibition was predetermined and students were responsible for concepts, designs, and installation.

CONTRIBUTION TO THE FIELD

In the peer-reviewed design literature, a total of 163 attributes, both implicit and explicit, were found. Most attributes appeared just once or twice across the literature; however, a small number were repeated between three and 12 times. These were considered prevalent, interrelated attributes (Table 2) and could be organized into four thematic clusters: Technology, Perception, Shape, and Language (Figure 2).
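The selection rule described above (attributes recorded across the articles, with those repeated three or more times treated as prevalent and then grouped into themes) can be sketched as a simple tally. This is a hypothetical illustration, not the spreadsheet procedure actually used: the sample rows, attribute names, theme assignments, and the functions `prevalent` and `cluster` are invented, and the sketch assumes that every recorded instance counts toward an attribute's total. Assigning attributes to themes remains an interpretive judgment; the code only records that judgment.

```python
from collections import Counter

# Invented sample rows for illustration only (NOT the study's data):
# each tuple is (article, attribute) for one recorded instance of sound.
coded_instances = [
    ("article 1", "composing"), ("article 1", "digital"),
    ("article 2", "composing"), ("article 2", "speech"),
    ("article 3", "composing"), ("article 3", "digital"),
    ("article 4", "digital"), ("article 4", "silence"),
]

# Hypothetical, analyst-assigned theme for each attribute.
THEMES = {
    "composing": "Shape", "digital": "Technology",
    "speech": "Language", "silence": "Language",
}


def prevalent(instances, threshold=3):
    """Count every recorded instance; keep attributes seen >= threshold times."""
    counts = Counter(attribute for _, attribute in instances)
    return {a: n for a, n in counts.items() if n >= threshold}


def cluster(prevalent_counts, themes):
    """Group the prevalent attributes under their assigned theme."""
    clusters: dict[str, list[str]] = {}
    for attribute in sorted(prevalent_counts):
        clusters.setdefault(themes[attribute], []).append(attribute)
    return clusters
```

With the invented rows shown, `prevalent(coded_instances)` returns `{'composing': 3, 'digital': 3}`, and `cluster` places them under Shape and Technology; "speech" and "silence", seen once each, fall below the threshold.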

Theme: Technology

Researchers frequently addressed the means to transmit, generate, and use sound. Technological means and devices influence communication systems: where "sound technology was modeled on vision," now "visual technology is increasingly modeled on hearing" (van Leeuwen 2007). Researchers mentioned the need to explore or control new sound technology, including implications of cinematic sound (Faden 1999, Langkjær 1997, Hayward 1999); challenges in interactive media design education (Yantaç & Özcan 2006); opportunities with sound poetry in multi-, hyper-, and inter-media (Menezes 2001); and digitizing speech (Costa 2001). Sound technologies have social and cultural effects: as mobile devices, they produce "forms of sociality: the reshaping of public and private space, modes of conduct, moral orders, and patterns of interaction" (Farnsworth & Austrin 2005), while digital technology provides a way for audio/visual sound design to reach the public (Potts 1997). The recording and preservation of sound, and its conversion from analog to digital media, were noted in regard to "cultural context and mode of production… which influence how the recorded sounds are heard" (Stakelon 2009). Systematic consideration of sound is necessary for producing new artificial sounds (Polotti & Lemaitre 2013) and for exploring digital synthesis (Valsamakis & Miranda 2005). Herbert Bayer's adherence to "new forms for new technologies" in his phonetic type design (Burnett 1990) relates to creating "new ways not only to read and see the world but also to hear it," an endeavor that also carries limitations and ethical and legal issues (Neuenfeldt 1997). Technology, in connection with sound, may be digital or analog and has socio-cultural implications.

Theme: Perception

Sound as a ubiquitous part of the senses and of meaning-making was referenced by many scholars. Psychoacoustics may "precede emotional responses and power judgments" (Özcan & van Egmond 2012), while resonance and immersion form a significant part of creating and engaging with sound-based work (Cabrera 1997, Alarcón 2013). Sound involves and connects us in the world (van Leeuwen 2007) and is part of human sensory experience and expression (Polkinhorn 2001, Waterman 1989, Szkárosi 2001). Working with sound-intensive media was discussed in the context of user-centered design (Yantaç & Özcan 2006, Polotti & Lemaitre 2013, Özcan & van Egmond 2012) and of interfaces for persons with sensory or physical impairments (Gärdenfors 2003, Williams et al. 2007, Azeredo 2007). Spatial, mental constructions of sound are connected to materiality in physical environments (Fowler 2013) and to perceptions of time and distance (Langkjær 1997). However, sound as an "invisible, see-through space" (Behrendt 2012) is experienced differently from visual information, in that "we see and feel its effects but ignore it phenomenologically" (Potts 1997), though it "opens up the potential for new, often unanticipated networks of connection" (Farnsworth & Austrin 2005). Designer and listener share the perception of sound.

Theme: Shape

Making with or through sound was referenced by scholars in connection with designing (Yantaç & Özcan 2006, Burnett 1990, Gärdenfors 2003, Potts 1997); recording, selecting, and creating (Alarcón 2013); composing (Azeredo 2007, Valsamakis & Miranda 2005); scoring (Hayward 1999, Faden 1999); and visualizing (Fowler 2013, Langkjær 1997, Szkárosi 2001). The merging of sound with other media is an imaginative activity: in design education, students were instructed to "conceptualize sound in graphic terms" (Fowler 2013) while recognizing that "the basis of multimedia design is not solely visual, the ideal combination being visual, sound and text" (Yantaç & Özcan 2006). This idea of synthesis is furthered through the "mediation and integration of music into multimedia environments" (Neuenfeldt 1997) and through sound becoming "an integrated part of theories of audio-visual media in historical, technical and aesthetic contexts" (Langkjær 1997). As a means for composing, sound combined with other media is described as assemblage (Farnsworth & Austrin 2005) or as signs within a system of collage, where "the association between them is due to the fact that they are present in the same space" (Menezes 2001). To shape sound is to form it, or otherwise determine its nature, alongside visual media.

Theme: Language

Researchers also connected sound to speaking, reading, writing, and hearing, with earlier research devoted to phonetics and spelling (Frith 1978, Baron & Hodge 1978) and more recent research focused on sound poetry. 5 The relationship between sound and image was frequently addressed: sound appears as "the music of language" (Polkinhorn 2001) originating in voice or technology (Menezes 2001, Szkárosi 2001), and type as "the visual expression of sound" through form (Burnett 1990) as well as through meaningful silence (Waterman 1989). Writing used to describe sound (Fowler 2013) and visual texts considered as "a sort of orchestral 'score' for a sound text" (Scholz 2001) bring sound representation into two-dimensional space. The performance of sound (Costa 2001, Valsamakis & Miranda 2005) relates to its real-time character as language to be listened to. Language, here, concerns our use of words as speech, text, and voice.

Concept antecedents and consequences

To relate the outcomes of the concept analysis to design practice, antecedents were interpreted as problems or opportunities, and consequences as solutions or products. Antecedents precede the use of sound; they are conditions that provide a foundation for the concept to occur. These include digital technology, the needs of people with physical or sensory impairments, social inquiry, and creative expression. Consequences are outcomes that take place as a result of sound and may be tangible or conceptual. Examples include digital interfaces, multi-sensory feedback environments, and new forms of sound-centric experiences.

Prototypes

From the interaction design course, three demonstrative examples of designing with, and through, sound emerged. A solution to the first interaction design assignment, ParkSmart, is a mobile app for navigating campus parking from within a car (Figure 3). The system consists of a user interface for a smartphone and an RFID tag on the car that connects to a campus parking infrastructure. Because the app would be used while driving, it needed to be hands-free and distraction-free. To show how ParkSmart works, students created images and a video walkthrough to situate the project within a vehicle and demonstrate the app's voice-based interaction. Digital speech was recorded to demonstrate the audio coming from the app, and the inclusion of a recorded human voice ("yeah") reveals the simple feedback required from the driver. The user interface contains minimal visual information and becomes secondary to the sound capabilities. An animated sound wave provides a visual cue for the audio.

Inertia (Figure 4), a digitally interactive fitness center system, is a student team's solution to the second course assignment. The students recognized that workouts can be difficult, confusing, or intimidating, and their solution guides individual workouts and facilitates social connections. The Inertia experience needed to operate hands-free and allow gym members unencumbered movement during their workouts. The students proposed a motion-sensing input system within the gym that would connect with smart, wireless earbuds and a mobile app to guide workouts and track performance and vitals while also allowing communication. 6 The technology "speaks" to gym members through the earbuds; this voice, dubbed an "Echo," guides a personalized workout and connects with others at the gym for friendly competition. Pitching this concept, however, required prototyping a fictional workout with special attention to audio. The students scripted and produced a video of two fictional Inertia gym members and their Echoes, allowing us to listen in on both sets of earbuds to understand the system's capabilities. One challenge the students encountered was the hierarchy of sound and image: we needed to see the physical activity but also make sense of the audio input and exchange through the earbuds. By using a color overlay to slightly obscure the visuals, along with two distinct voices, the students directed our attention toward listening. On-screen text supports understanding of the integrated system.

Finally, as part of an experimental environment, What's Real: Investigating Multimodality, a student team conceptualized, designed, and built an exhibit on the subject "top hat," using sound as the primary mode of interaction for visitors. The interaction design students on the sound team used existing technologies to invite users to experience top hats, which are primarily understood as tactile, visual objects, through audio content. Three core digital interfaces were designed and developed as high-fidelity prototypes. The audio content consisted primarily of top hat pop culture sound clips, referencing characters or people who wore them. An iPod listening station (Figure 5) contains an audio recording of Abraham Lincoln's Gettysburg Address, read by one of the students; an interactive quiz game (Figure 6) invites visitors to recognize spoken-word clips from pop culture icons related to top hats. In the center of the exhibit, two iPads situated in front of a visual projection contain a sound-mixing DJ/beatbox application (Figure 7); the projected visualization responds to audio changes. These tablet interfaces invite users to select a music track, then overlay it with audio clips related to top hats. The listening station and interactive quiz were designed for individuals, while the beatbox invited social interaction. The exhibit is inviting and accessible; text is available for users unable to listen. All three pieces in the exhibit were the result of recording, sampling, visualizing, and designing sound for a digital and physical learning space.
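The paragraph does not say how the projection tracked the audio. One common approach, sketched here as a hypothetical illustration rather than the students' implementation, is to measure the loudness of each short frame of sound as its root-mean-square (RMS) level, convert it to decibels, and map the result onto a visual parameter such as size or brightness. The sketch assumes mono samples in the range -1.0 to 1.0, 1024-sample frames, and a -60 dB floor; the function names are invented.

```python
import math


def frame_rms(samples: list[float]) -> float:
    """Root-mean-square level of one frame of samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def to_visual_level(rms: float, floor_db: float = -60.0) -> float:
    """Map an RMS level to 0.0-1.0 on a decibel scale (0 dB full scale -> 1.0)."""
    if rms <= 0.0:
        return 0.0
    db = 20.0 * math.log10(rms)
    # Levels at or below floor_db map to 0.0; full scale maps to 1.0.
    return min(1.0, max(0.0, (db - floor_db) / -floor_db))


def levels(signal: list[float], frame_size: int = 1024) -> list[float]:
    """Split a mono signal into frames and return one visual level per frame."""
    return [
        to_visual_level(frame_rms(signal[i:i + frame_size]))
        for i in range(0, len(signal), frame_size)
    ]
```

For example, `levels([1.0] * 1024 + [0.0] * 1024)` returns `[1.0, 0.0]`: a full-scale frame drives the visual to its maximum and a silent frame to zero. Mapping in decibels rather than raw amplitude roughly follows how loudness is perceived, so quieter passages still produce visible motion.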

IMPLICATIONS FOR THEORY AND PRACTICE

The four concept themes (Technology, Perception, Shape, and Language) and the various attributes within each form the beginnings of a framework to understand sound as a theory, medium, and expression in design. The concept analysis shows that research on sound exists within the scholarship but is limited (not surprising in a primarily visual discipline). This also suggests that perhaps designers work intuitively with sound, and that a need for more scholarly investigation has simply not been identified. Current publication practices, however, make it difficult to "show" sound in our documented research; presenting sound in design research currently requires written descriptions, static images, and/or links to online sources.

Sound in the student prototypes is integrated with visual information and crosses all four themes from the concept analysis, indicating that sound is not perceived in singular, isolated terms. Designers are using sound beyond functional communication; their works are intended for a user or audience and, combined with graphic and interactive media, can address logical, ethical, and emotional aspects of experience. When bringing sound into design, understanding the hierarchical relationships between visual and audial elements is critical. In the student work discussed here, sound required careful consideration of content, digital audio technologies, context, and, perhaps more important, what it expresses and what it may mean to the listener.

To employ sound as an ingredient in designed experiences is to remediate it, and fluency in designing with sound is as valuable as knowing how to use shape, color, and type. Sound in prototyping can help communicate ideas to people involved with development: colleagues, stakeholders, programmers, engineers, fabricators, etc. Creative research with sound may also dive deeper into “what might be” and consider alternative ways sound can be brought into designed communication. We might also look at designing for the absence of sound, or study speaking and hearing as part of designed interactions.

This investigation presents more questions than answers, and as the concept analysis results suggest, there is ample opportunity for more inquiry on this topic. Should we continue to work with sound intuitively, or do we need guiding principles for discourse and practice? How will it add value to our work? Design researchers may further develop sound studies and theory specifically related to visual and interactive design. As we better understand how sound fits with our visual expertise, we can create more engaging human experiences and push the edges of our discipline into new territory.

About the author

Jessica Barness is an assistant professor in the School of Visual Communication Design at Kent State University. She has an MFA in design from the University of Minnesota and an MA in studio art from the University of Northern Iowa. Previously, she worked as a graphic designer with Conway+Schulte Architects in Minneapolis and as a senior product designer in Chicago. Barness’ research through design, on topics related to social identity, language and interactive media, has been exhibited and published internationally.

Endnotes

1. Alvin Huether (former WWII radioman) in discussion with the author, December 2011.

2. An interesting choice of words, but Sutherland was most likely referring to sound as "in good condition" rather than audio-based media.

3. The following journals were searched: Convergence, Design and Culture, Design Issues, Design Studies, Digital Creativity, Information Design Journal, International Journal of Design, Iridescent, Journal of Design History, Visible Language, and Visual Communication.

4. For an explanation of multimodality, see Jeff Bezemer "What is multimodality?" MODE: Multimodal Methodologies. February 16, 2012. http://mode.ioe.ac.uk/2012/02/16/what-is-multimodality/.

5. Special issue of the journal Visible Language 35, no. 1 (2001).

6. Inertia would use technology such as Microsoft's Kinect 2.0 system (www.microsoft.com, 2014) and Dash wireless earphones by Bragi (www.bragi.com, 2014).

References

- Alarcón Z. Creating sounding underground. Digital Creativity 24, no. 3 (2013):252-8.

- Azeredo M. Real-time composition of image and sound in the (re)habilitation of children with special needs: a case study of a child with cerebral palsy. Digital Creativity 18, no. 2 (2007):115-20.

- Baron J, Hodge J. Using spelling-sound correspondences without trying to learn them. Visible Language 12, no. 1 (1978):55-70.

- Behrendt F. The sound of locative media. Convergence 18, no. 3 (2012):283-95.

- Burnett K. Communication with visual sound: Herbert Bayer and the design of type. Visible Language 24, no. 3 (1990):298-333.

- Cabrera D. Resonating sound art and the aesthetics of room resonance. Convergence 3, no. 4 (1997):108-37.

- Costa M. The word of poetry, sounds of the voice and technology. Visible Language 35, no. 1 (2001):6-11.

- Faden E. Assimilating new technologies: early cinema, sound, and computer imagery. Convergence 5, no. 2 (1999):51-79.

- Farnsworth J, Austrin T. Assembling portable talk and mobile worlds: sound technologies and mobile social networks. Convergence 11, no. 2 (2005):14-22.

- Fowler M. Soundscape as a design strategy for landscape architectural praxis. Design Studies 34, no. 1 (2013):111-28.

- Frith U. From print to meaning and from print to sound. Visible Language 12, no. 1 (1978):43-54.

- Gärdenfors D. Designing sound-based computer games. Digital Creativity 14, no. 2 (2003):111-14.

- Hayward P. Inter-planetary soundclash: music, technology and territorialisation in Mars Attacks! Convergence 5, no. 1 (1999):47-58.

- Holmes T. Electronic and experimental music: technology, music, and culture. Oxford, UK: Routledge, 2008.

- Langkjær B. Spatial perception and technologies of cinema sound. Convergence 3, no. 4 (1997):92-107.

- Mathews M, Miller J. Computer editing, typesetting and image generation. Proceedings of the Fall Joint Computer Conference (1965):389-98.

- Menezes P. Experimental poetics based on sound poetry today. Visible Language 35, no. 1 (2001):64-75.

- Neuenfeldt K. The sounds of Microsoft: the cultural production of music on CD-ROMs. Convergence 3, no. 4 (1997):54-71.

- Özcan E, van Egmond R. Basic semantics of product sounds. International Journal of Design 6, no. 2 (2012):41-54.

- Pedersen E, Burton K. A concept analysis of creativity: uses of creativity in selected design journals. Journal of Interior Design 35 (2009):15-32.

- Polkinhorn H. True heritage: the sound image in experimental poetry. Visible Language 35, no. 1 (2001):12-19.

- Polotti P, Lemaitre G. Rhetorical strategies for sound design and auditory display: a case study. International Journal of Design 7, no. 2 (2013):67-82.

- Potts J. Is there a sound culture? Convergence 3, no. 4 (1997):10-14.

- Scholz C. Relations between sound poetry and visual poetry, the path from the optophonetic poem to the multimedia text. Visible Language 35, no. 1 (2001):92-103.

- Stakelon P. A sound that never sounded: the historical construction of sound fidelity. Convergence 15, no. 3 (2009):299-313.

- Sutherland I. Sketchpad: a man-machine graphical communication system. Proceedings of the Spring Joint Computer Conference (1963):329-46.

- Szkárosi E. A soundscape of contemporary Hungarian poetry. Visible Language 35, no. 1 (2001):48-63.

- Valsamakis N, Miranda ER. Iterative sound synthesis by means of cross-coupled digital oscillators. Digital Creativity 16, no. 2 (2005):90-8.

- van Leeuwen T. Sound and vision. Visual Communication 6, no. 2 (2007):136-45.

- Walker LO, Avant KC. Strategies for theory construction in nursing. Upper Saddle River, N.J.: Pearson Prentice Hall, 2005.

- Waterman A. Soundings along the lines. Visible Language 23, no. 1 (1989):123-32.

- Williams C, Petersson E, Brooks T. The Picturing Sound multisensory environment: an overview as entity of phenomenon. Digital Creativity 18, no. 2 (2007):106-14.

- Yantaç AE, Özcan O. The effects of the sound-image relationship within sound education for interactive media design. Digital Creativity 17, no. 2 (2006):91-9.

Figures

- Figure 1. Morse code was designed as a way to communicate text. It can be read, seen, and heard.

- Figure 2. Sound themes from concept analysis (Source: Jessica Barness, 2014)

- Figure 3. ParkSmart is a hands-free mobile app with voice interaction. (Source: Daniel Echeverri, Kent State University, 2014)

- Figure 4. Inertia is an interactive fitness gym system with motion-sensing input and smart earphones. Top: Proposed interior environment showing Kinect hardware on walls and Dash earphones in foreground (left); screen shot from video of Inertia workout (right). Bottom: Detail of user pathway highlighting parts of the Inertia workout. (Source: Alex Herbers, Jun Mao, and Alanah Timbrook, Kent State University, 2014)

- Figures 5–7. Interface details from the What's Real? Investigating Multimodality museum exhibit designed and produced by students, focused on sound as a mode of interaction. Digital interfaces were designed by Joshua Bird, Daniel Echeverri, and Gina LaRocca, Kent State University, 2014. (Photos: Daniel Echeverri)

Tables

- Table 1. Journals containing articles meeting criteria for the concept analysis, issues analyzed, and number of articles found in each. All journal archives were searched within online databases.

- Table 2. Primary attributes for sound, occurring three times or more throughout the journals. These refer to the context in which sound is used.

Find more content in your areas of interest in SEGD’s Xplore Experiential Graphic Design index.